FIDO2 is growing as a solution to the password hell we all know too well by replacing passwords stored in brains with secret keys stored on dedicated hardware security keys. This new protocol brings a huge increase in security and in usability, but the security key poses an attractive new target for attackers. For my Master’s thesis, I implemented a safer FIDO2 security key that I call Plat. Plat is a new implementation of a FIDO2 security key that retrofits privilege separation onto an existing codebase in order to prevent possible bugs from compromising the security of the key. Plat uses a new WebAssembly-based toolchain to create isolation domains on our embedded ARM platform while minimizing the need to write new code. In addition to isolating software-only libraries, Plat’s toolchain enables privilege separation of drivers by controlling access to individual hardware peripherals.

This post talks about the work completed in my Master’s thesis. For more details, you may want to look at the thesis itself.

Context

While passwords are in widespread usage due to their convenience and implementation simplicity, they have a long history of security issues, from weak passwords and common password reuse to phishing vulnerability and server database leaks. FIDO2’s WebAuthn standard, which was formed by a group of tech companies like Google and Apple and is quickly gaining momentum as an authentication standard, aims to solve many of these issues by replacing passwords with an automated public-key signature exchange. In doing so, FIDO2 removes the need for users to manually remember and enter passwords, and thus eliminates many of passwords’ inherent security risks, by reducing the security of web-based authentication to the security of a user’s private key. There have been previous attempts to replace passwords, but FIDO2 seems to be the one that’s gaining steam: Apple has added authenticators to their devices and Google recently enabled WebAuthn as a password replacement for Google accounts.

Though companies like Apple are embedding authenticators into their devices, authentication via WebAuthn and its U2F predecessor today is often done via physical security keys like the YubiKey. In addition to a familiar lock-and-key mental model, these physical security keys can provide strong security: since these devices focus on a single task, their codebase can be much smaller than the millions of lines that comprise a modern PC’s operating system, drivers, and applications, and therefore can have far fewer bugs and vulnerabilities. However, security keys and similar devices like hardware cryptocurrency wallets have still had many bugs. Careful review can remove many of these bugs, as can replacing the C used in their source code with a memory-safe language like Rust. But ultimately it is very difficult to find and fix every bug in a system, leaving the door open to vulnerabilities. Since authenticators have such high-value contents, they are a likely attack target, and an exploit of a bug in an authenticator can compromise the account security that users rely on these authenticators to provide.

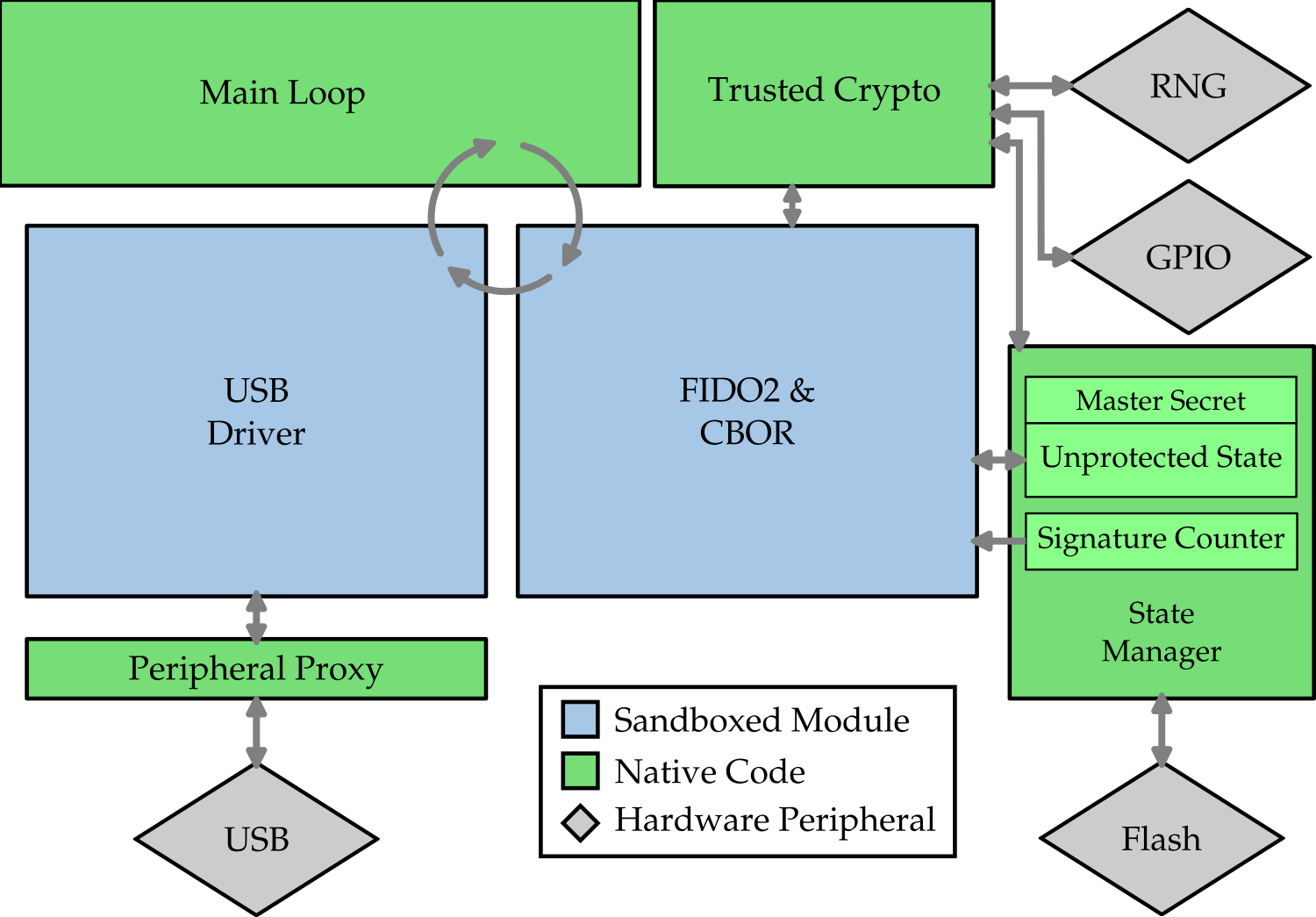

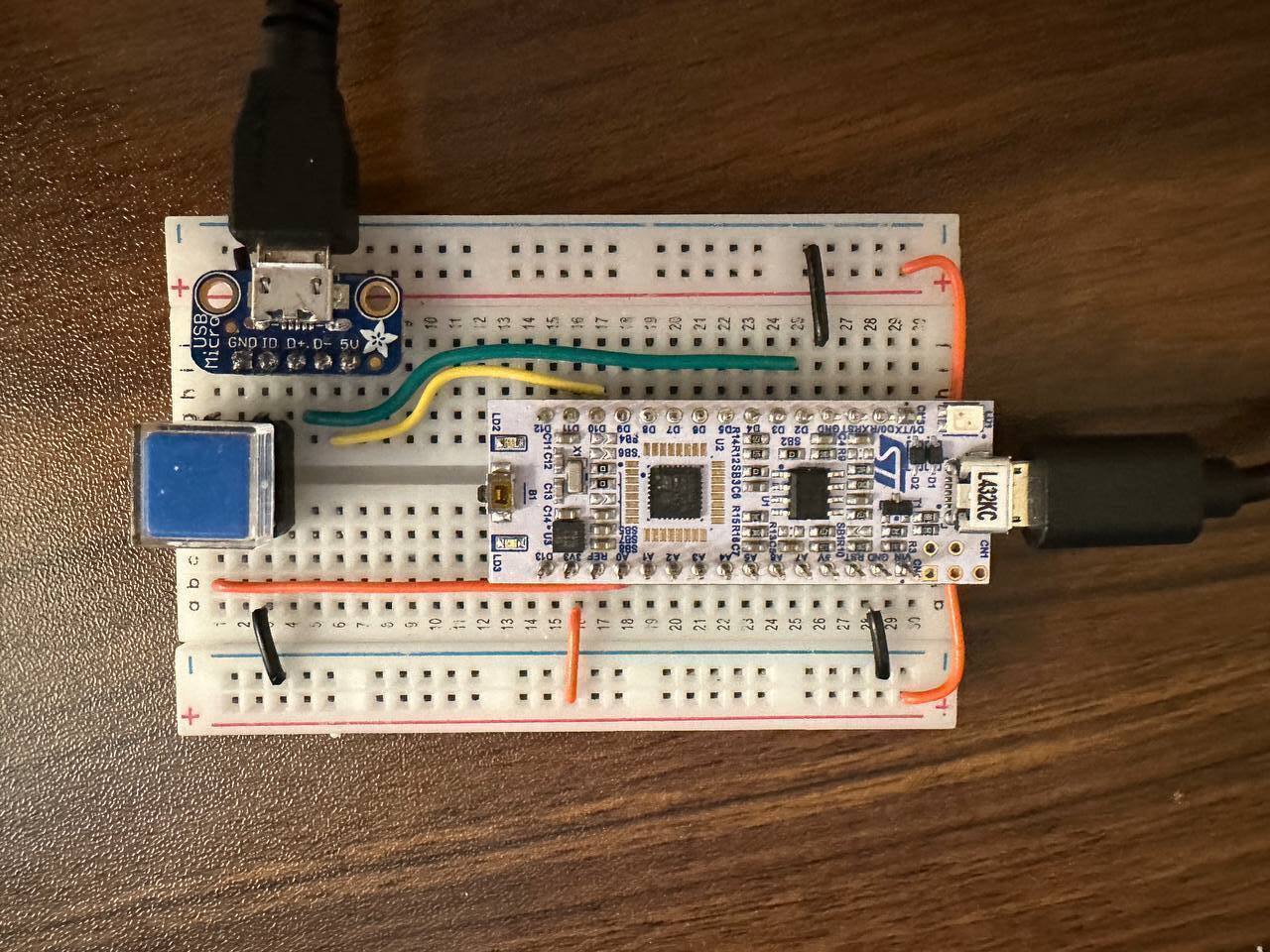

For my recently-completed Master’s thesis, I designed and implemented a security key that I call Plat. Plat implements the same functionality as existing security keys, but uses privilege separation to limit the damage that many types of bugs can cause. Plat adds this privilege separation to the open-source SoloKey codebase, using the same basic hardware components (pictured on the breadboard I used to prototype) and minimizing the new code that must be written.

Threat Model & Security Goals

The security of the FIDO2 protocol relies fundamentally on the security of each user’s secret keys. If an attacker learns a user’s secret key, they can forge the cryptographic signatures at the protocol’s core and pretend to be the user. In order for the FIDO2 protocol to be usable, the risk of secret key compromise must be low.

Unfortunately, given the huge scale of modern PC software, computer compromises are relatively common. If secret keys were simply stored in a regular program on the user’s machine, a simple piece of malware would allow an attacker permanent access to all of a user’s accounts.

Implementing the authenticator as a standalone device like a YubiKey offers some protection against a compromised host PC: while an attacker who compromises a user’s PC would be able to trivially read secret keys from an authenticator implemented as an application, a hardware authenticator that is attached via a USB port can still enforce its own restrictions even when the host PC is compromised. These security keys are typically designed to allow the connected host PC to send signature requests to the key and, perhaps after a required button press by a human, receive a signature in response. If the security key works as intended, the security key should never reveal the secret key to the host computer.

But an attacker who compromises a host PC still gets significant power to interact with a connected authenticator by sending arbitrary messages over USB to the authenticator. If the authenticator includes bugs that are exploitable via USB, an attacker could circumvent the protections that hardware security keys aim to provide and get unrestricted access to a user’s accounts.

Even if a user’s PC is compromised and the authenticator code includes bugs, Plat aims to ensure that these bugs cannot cause total long-lasting account compromise. Specifically, we aim to ensure that the secret key is not revealed and that each signature performed has the explicit approval of the user.

Plat’s Approach: Privilege Separation

Plat uses privilege separation to limit the damage caused by many classes of bugs and achieve these goals. Used in security-critical software like browsers, privilege separation is a powerful technique that involves splitting a system into several components and placing restrictions on each component that give it permission to perform only actions that are strictly necessary for its function. For effective privilege separation, each component must be placed in a sandbox that limits its interaction with other components and with the host environment that coordinates all of the components. Except as explicitly allowed by the host environment’s configuration, these sandboxed components should not be able to affect the execution of anything outside of their sandbox: a component should not be able to access memory not owned by that component, and a component should not be able to jump to code that is not part of that same component. We call these sandboxed components modules.

The choice of the boundaries of these modules defines the security of a privilege-separated system. With all code in a single module, privilege separation gives no benefits—code within a module is not protected from other code within that same module. But with too-small modules, it may be necessary for a module to expose a wide API, increasing its vulnerability exposure and increasing overall complexity. To be effective, modules must be chosen to encapsulate a given functionality with a narrow API, to meaningfully separate components based on the privileges they require, and to separate bug-prone code from the code we aim to protect.

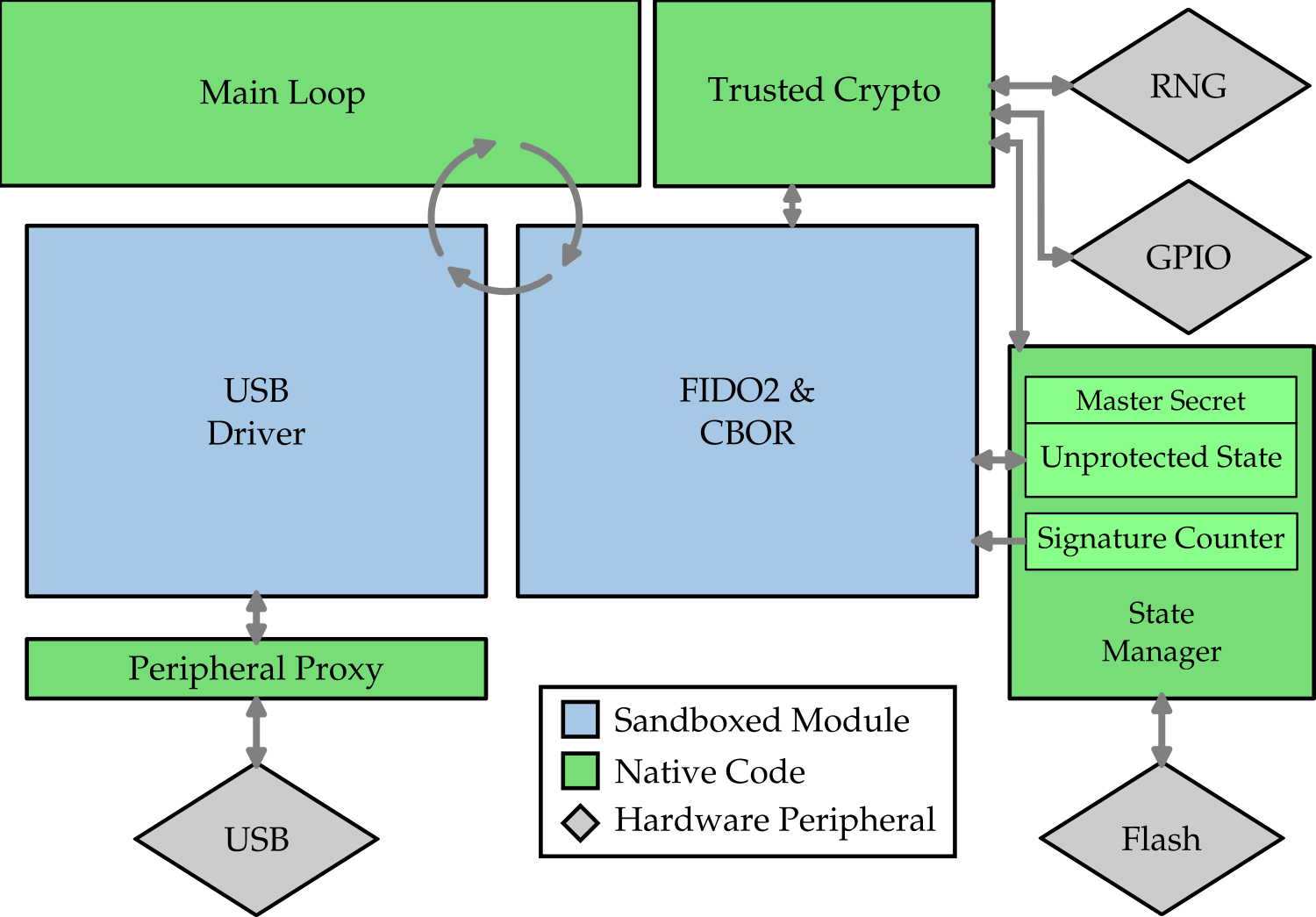

We expected most bugs to reside in the most complex pieces of the codebase. First, the USB stack seemed like a likely source of bugs: drivers historically have been responsible for many bugs, including a majority of bugs in the Linux kernel, and USB has been a source of bugs on embedded devices in the past. Parsers are also notoriously bug-prone, and we anticipated that the FIDO2 processing logic, which includes a CBOR parsing library, may be another source of bugs. As seen above, Plat places each of these bug-prone components into its own module, restricting its access to hardware resources and to sensitive cryptography state. This way, even if the USB stack or the CBOR parser has a bug that allows an attacker to gain control of that module, the attacker still will not be able to read out the secret key, since the privilege separation system does not provide these modules with any way to do so.

Isolating Modules with WebAssembly

We decided to implement the isolation that we needed using WebAssembly. While WebAssembly, as the name implies, was designed to run in the browser, its isolation guarantees are useful far beyond the web. In order to support the execution of code shipped from an untrusted web server, Wasm is built around the concept of sandboxed modules. The language ensures that WebAssembly code cannot affect the host environment except through a clearly defined API. And unlike other sandboxing and isolation solutions, WebAssembly does not rely on an operating system to provide a process abstraction or on fancy processors to provide page table hardware, neither of which was an option for Plat.

WebAssembly provides the isolation and OS-independence that we need for privilege separation out of the box. Somewhat less straightforward was building a system to compile multiple WebAssembly modules and run them together on the ARM microprocessor that Plat is based on.

Runtimes and compilers like wasm3 and aWsm support ARM processors like the one we use for Plat, but require including an interpreter in the case of wasm3 or working with generated binaries in the case of aWsm. Instead, we use a wasm2c-based toolchain similar to the one used in Firefox to sandbox untrusted libraries, and extend it with the features we need.

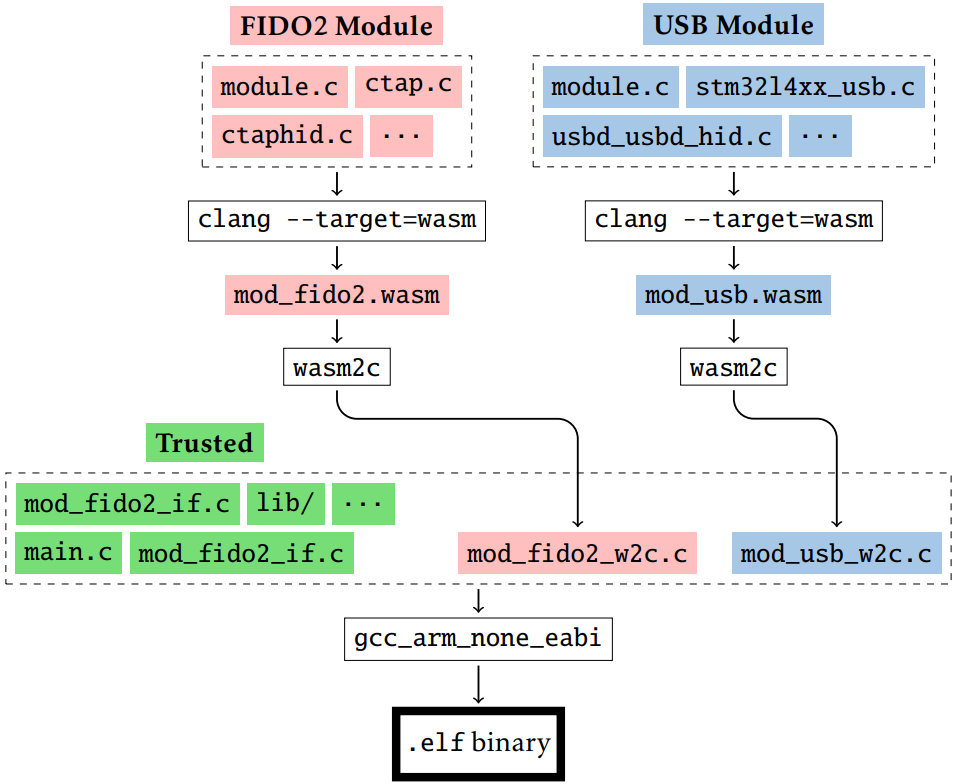

As seen above, Plat’s toolchain compiles C code into several WebAssembly modules before translating them back into more C code with added safety checks to preserve Wasm’s isolation guarantees. Unlike WebAssembly, C itself does not include any safety checks or make any isolation guarantees. To preserve isolation between modules, we trust wasm2c to insert the necessary checks into the generated C code. A nice benefit of this C-based toolchain is that it makes linking to and between our modules very simple. For each function that a module imports or exports, wasm2c generates function declarations that the host environment can then import as normal C functions, with no manual ABI translation required. It also makes our compilation and linking process simple: once we have the C code that wasm2c generates, the final binary generation is exactly the same as if we were compiling any monolithic C application.

This toolchain allows us to create sandboxed modules from existing C code that each have their own memory space and a statically defined set of jump targets, ensuring that each module’s code cannot escape its sandbox. Further, modules are able to import and export functions to interact with each other and with functions defined in the host environment. But the toolchain does not itself provide the safe access to peripheral MMIO that we require for the USB driver or the safe access to cryptography that the FIDO2 module needs.
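To make the idea of "added safety checks" concrete, here is a minimal sketch of the kind of bounds-checked memory access that a Wasm-to-C translation depends on. The names (`module_memory_t`, `checked_load_u32`, `trapped`) are hypothetical and are not the identifiers that wasm2c actually generates; a real runtime would also halt the module on a trap rather than set a flag.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for one module's linear memory. */
typedef struct {
    uint8_t *data;  /* base of the module's linear memory */
    uint32_t size;  /* size of the linear memory in bytes */
} module_memory_t;

/* Illustrative trap flag; a real runtime would stop the module instead. */
int trapped = 0;

/* Load a 32-bit word at `offset`, refusing any access outside the memory. */
uint32_t checked_load_u32(const module_memory_t *mem, uint32_t offset) {
    /* Use 64-bit arithmetic so that offset + 4 cannot wrap around. */
    if ((uint64_t)offset + sizeof(uint32_t) > mem->size) {
        trapped = 1;
        return 0;
    }
    uint32_t value;
    memcpy(&value, mem->data + offset, sizeof value); /* alignment-safe copy */
    return value;
}
```

Because every load and store in the translated code goes through a check of this shape, a pointer bug inside a module can corrupt only that module's own memory.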

Making It All Work

Because WebAssembly’s isolation does not rely on hardware support, it is a compelling mechanism for implementing privilege separation in Plat. But, like any isolation strategy, it comes with some challenges. Implementing Plat involved designing several new constructs to enable passing of large arguments across module boundaries, to allow sandboxing the USB driver that requires access to peripheral memory, and to enable the FIDO2 module to perform signatures while ensuring that it cannot learn, modify, or abuse the authenticator’s secret key.

Protecting Secret State

Plat’s design aims to sandbox as much of the FIDO2 parsing and logic code as possible in order to guarantee that bugs in that code cannot read, modify, or use the secret key except as allowed. This is complicated by the fact that the FIDO2 code necessarily needs to perform signatures and other cryptography using this secret key. To address this, Plat’s design separates the core FIDO2 parsing and logic from the code that accesses the secret key and uses Plat’s State Manager to ensure that the isolated FIDO2 code cannot learn the secret key.

Plat separates the cryptography code into two pieces. Cryptography code that does not require access to the secret data, such as simple SHA256 hashes, is part of the sandboxed code. Cryptography functions that do use the secret key, however, such as signature and HMAC code, run in the trusted host environment and are available to the FIDO2 module only as an import. We call these functions with access to the secret key “trusted crypto” functions, and their API is designed to allow the module to learn signatures and MACs that use the secret key, but to prevent the module from learning the secret key itself. To get a signature over the data required to perform a FIDO2 authentication, for example, the FIDO2 module makes a call to t_crypto_ecc256_sign() and receives the signature in response. The secret key is automatically supplied by the host environment without the module ever seeing it.

Placing the cryptography operations behind an API in this way also allows us to place limits on when signatures can be calculated. Before computing a signature, the trusted cryptography code will ensure that the user has pressed the authenticator’s button to approve this signature and will increment the signature counter.
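The gating described above can be sketched roughly as follows. Only the name `t_crypto_ecc256_sign` comes from Plat; its signature here, the `button_pressed` flag, and `placeholder_sign` (which stands in for the real ECDSA routine and does not produce a real signature) are assumptions made for illustration.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SIG_LEN 64

/* Host-only state: never placed inside any module's linear memory. */
uint8_t  secret_key[32];
uint32_t signature_counter;

/* Hypothetical stand-in for the button driver. */
bool button_pressed;
bool user_pressed_button(void) { return button_pressed; }

/* Placeholder for the real ECDSA routine: NOT a real signature. */
void placeholder_sign(const uint8_t *key, const uint8_t *data, size_t len,
                      uint8_t *sig) {
    (void)key; (void)data; (void)len;
    memset(sig, 0xA5, SIG_LEN);
}

/* Returns 0 on success, -1 if the user has not approved the signature. */
int t_crypto_ecc256_sign(const uint8_t *data, size_t len, uint8_t sig[SIG_LEN]) {
    if (!user_pressed_button()) {
        return -1;  /* no approval: no signature and no counter change */
    }
    signature_counter++;                         /* count each approved signature */
    placeholder_sign(secret_key, data, len, sig); /* key is supplied by the host */
    return 0;
}
```

The module passes in only the data to sign and gets back only the signature, so the approval check and the counter update cannot be skipped by sandboxed code.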

By storing the secret key in a separate memory region, we can ensure that sandboxed code cannot access it while running. However, security keys are designed to be unplugged and moved between computers frequently. To enable this, the secret key is stored along with all other authenticator state in persistent flash storage. Instead of allowing the FIDO2 module to access this flash storage directly and potentially read or modify the secret key, Plat’s state manager interposes on requests to read the state from flash and masks out the secret key. As illustrated below, the state manager allows only the trusted cryptography code to read the secret key from flash. The state manager also provides the FIDO2 module with only read-only access to the signature counter, ensuring that it cannot be corrupted by a bug in the module.
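As a rough sketch of this interposition, the state manager can hand modules a copy of the state with the key zeroed and ignore any attempt to write back protected fields. The struct layout, the `pin_retries` field, and the function names below are hypothetical and chosen only to illustrate the idea.

```c
#include <stdint.h>
#include <string.h>

#define KEY_LEN 32

/* Hypothetical layout of the authenticator state kept in flash. */
typedef struct {
    uint8_t  secret_key[KEY_LEN];
    uint32_t signature_counter;
    uint8_t  pin_retries;  /* example of ordinary, module-writable state */
} authenticator_state_t;

/* Stand-in for the contents of persistent flash. */
authenticator_state_t flash_state;

/* Read on behalf of a sandboxed module: the secret key is masked out. */
void state_read_for_module(authenticator_state_t *out) {
    *out = flash_state;
    memset(out->secret_key, 0, KEY_LEN);
}

/* Write on behalf of a sandboxed module: protected fields are preserved. */
void state_write_from_module(const authenticator_state_t *in) {
    flash_state.pin_retries = in->pin_retries;
    /* secret_key and signature_counter are deliberately not copied. */
}
```

With this shape, a compromised module sees the counter but cannot change it, and never sees the key bytes at all.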

Sandboxing the majority of the FIDO2 code while leaving the trusted cryptography functions in the trusted host, along with using the state manager to provide safe access to persistent memory, enables Plat to perform all the cryptography functionality required to perform its job while still ensuring that a bug in the FIDO2 parsing and logic code cannot allow an attacker to learn the secret key.

Providing Safe Imports

WebAssembly’s function call interface allows only numeric (integer and floating-point) arguments and return values for imported and exported functions that cross the module boundary. However, Plat needs to send more complex binary datatypes across the boundary. For example, the USB stack sends and receives 64-byte packets, and the FIDO2 module requests and receives signatures over arbitrary-length data blobs. To allow for these more complex arguments and return types, Plat uses pointers.

In order to preserve isolation, WebAssembly modules do not have direct access to system memory. Instead, each WebAssembly module has a dedicated “linear memory” region. It is not possible for a module to access memory outside of this dedicated region. Because of this, pointers in the global memory space—that is, memory addresses—are not the same as pointers within module memory—we call these offsets. Plat’s design includes machinery to translate between host memory addresses and module memory offsets and vice versa.

But we must be careful not to break isolation when we do this translation. In particular, it is not safe to blindly translate module-provided offsets into host memory addresses and pass the addresses to host functions. For instance, the FIDO2 module imports the function usbhid_send to send packets to the host PC. Thanks to our pointer-based approach, the function available inside the module C code looks exactly like it would without any privilege separation at all:

    void usbhid_send(uint8_t * msg);

wasm2c’s machinery generates a function declaration Z_envZ_usbhid_send that must be implemented by the host environment to provide the FIDO2 module with this import. To provide this import, the host definition calls a translation function translateGuestOffset, which also runs in the host environment, to convert the guest offset provided by the module into a physical memory address. The host definition then calls the underlying function with the true physical memory address of the argument. (In this case, usbhid_send is eventually routed to the USB module, but that does not matter for this example.)

    void Z_envZ_usbhid_send(struct Z_env_instance_t* env, u32 msgOffset) {
        // Convert the module-relative offset into a bounds-checked host address.
        uint8_t * msgAddr = translateGuestOffset(env, msgOffset, PKT_BUF_SIZE);
        usbhid_send(msgAddr);
    }

If this translation is naive and does not consider the length of module memory,

an attacker who gains control of the FIDO2 sandbox could use this to read out

the secret key! By manipulating the msgOffset argument to extend beyond the

end of module memory so that the resulting msgAddr points to the beginning of

the secret key, the attacker can cause the secret key to be sent over USB to the

compromised host PC.

To avoid this type of sandbox escape, the translateGuestOffset function is carefully written to ensure that the offset provided by the module actually falls within the bounds of the module’s memory. For operations that will read multiple bytes, the translation function also takes the maximum number of bytes to be accessed (PKT_BUF_SIZE above) and verifies that the entire region that could be accessed is within module memory. By using this translateGuestOffset every time a module offset needs to be converted to a physical memory address, we ensure that all translations are checked and that a module cannot trick an imported function into violating the sandbox on its behalf. This technique is used for every import with blob-type arguments in Plat.
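A checked translation of this kind can be sketched as below. This is not Plat's actual translateGuestOffset: the `struct module_env` layout, the snake_case function name, and the choice to return NULL on failure (rather than trap) are assumptions made so the example is self-contained.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view of a module instance: only its linear memory matters here. */
struct module_env {
    uint8_t *mem_base;  /* host address where the linear memory starts */
    uint32_t mem_size;  /* length of the linear memory in bytes */
};

/* Translate a module offset into a host pointer.
 * Returns NULL unless all of [offset, offset + len) lies in module memory. */
uint8_t *translate_guest_offset(const struct module_env *env,
                                uint32_t offset, uint32_t len) {
    /* Never compute offset + len directly: it could wrap around. */
    if (len > env->mem_size || offset > env->mem_size - len) {
        return NULL;
    }
    return env->mem_base + offset;
}
```

The comparison is arranged so that a huge offset cannot overflow into a small, valid-looking sum, which is exactly the kind of value an attacker in control of a module would supply.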

Sandboxing Device Drivers

Since drivers are common sources of bugs, we sought to sandbox the USB driver. But drivers typically require bare-metal access to the hardware: their job is to provide an abstraction over the memory-mapped IO provided by the peripheral.

However, the only memory accessible to a module directly is the module’s dedicated linear memory region. When module code dereferences a pointer, the pointer’s address is interpreted as an offset into this memory region and the dereference fails if the offset falls outside of the allocated memory region. This memory isolation is an integral part of WebAssembly’s sandboxing plan, but precludes MMIO access by drivers via WebAssembly load and store instructions: an attempted access to a specific MMIO address would be converted into nonsense and prohibited by WebAssembly’s guarantees.

Instead, Plat provides each module with access to what we call the peripheral proxy. This peripheral proxy comprises a set of imported functions that allow a module to access an explicitly defined set of physical memory addresses. For example, if the driver needs to read or write a 32-bit word to an MMIO register, it can use the following functions:

    uint32_t Z_envZ_memReadWord(struct Z_env_instance_t* env, uint32_t addr) {
        // Only touch addresses that this module is permitted to access.
        if (checkAddr(env, addr))
            return *(volatile uint32_t *) addr;
        trap();
        return 0;
    }

    uint32_t Z_envZ_memWriteWord(struct Z_env_instance_t* env, uint32_t addr, uint32_t value) {
        if (checkAddr(env, addr)) {
            // volatile keeps the compiler from eliding or reordering the MMIO write.
            *(volatile uint32_t *) addr = value;
            return 0;
        }
        trap();
        return 0;
    }

Parallel functions exist for 8-bit and 16-bit widths. Importantly, these functions first check that the module is allowed to access the given addr and then, if permitted, perform the requested read or write. This permission check is of crucial importance: if we omitted the permission check and simply allowed the module to read and write an arbitrary physical memory address, the sandboxed driver could escape its sandbox by reading secret state or overwriting memory to hijack control flow of the host environment. Plat’s peripheral proxy inspects the module-provided address before accessing it to ensure that the address corresponds to the peripherals that the driver is responsible for. To minimize the opportunity for errors and to ease development, Plat includes infrastructure to generate the checkAddr function that performs this check from a YAML file that specifies the individual peripheral memory regions and the modules that are allowed to access each:

    device-classes:
      - name: RCC
        allowed-regions:
          - start: 0x4002_1000
            end: 0x4002_13FF
      - name: PWR
        allowed-regions:
          - start: 0x4000_7000
            end: 0x4000_73FF
      - name: USB
        allowed-regions:
          - start: 0x4000_6800
            end: 0x4000_6BFF
          - start: 0x4000_6C00
            end: 0x4000_6FFF

    modules:
      - name: USBHID
        allowed-classes:
          - USB
          - RCC
          - PWR
      - name: FIDO2
        allowed-classes: []

From this specification, Plat’s infrastructure generates the checkAddr function definition, which simply translates the regions that each module is allowed to access into a series of conditionals that return true if an access is permitted.

    bool checkAddr(struct Z_env_instance_t * env, uint32_t addr) {
        // Region end addresses are inclusive, matching the YAML specification.
        switch (env->module_class) {
        case MODULE_CLASS_USBHID:
            return (
                // USB
                (addr >= 0x40006800 && addr <= 0x40006bff) ||
                (addr >= 0x40006c00 && addr <= 0x40006fff) ||
                // RCC
                (addr >= 0x40021000 && addr <= 0x400213ff) ||
                // PWR
                (addr >= 0x40007000 && addr <= 0x400073ff)
            );
        case MODULE_CLASS_FIDO2:
            return false;
        default:
            return false;
        }
    }
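The same check can also be written in a table-driven style that additionally verifies the full width of an access. This is an alternative sketch, not what Plat's generator emits: `mmio_region_t`, `usbhid_regions`, and `check_access` are hypothetical names, and the region bounds are copied from the YAML specification with inclusive end addresses.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One permitted MMIO range; `end` is inclusive, as in the YAML file. */
typedef struct {
    uint32_t start;
    uint32_t end;
} mmio_region_t;

/* Regions that the USBHID module may access. */
const mmio_region_t usbhid_regions[] = {
    {0x40006800u, 0x40006BFFu},  /* USB */
    {0x40006C00u, 0x40006FFFu},  /* USB */
    {0x40021000u, 0x400213FFu},  /* RCC */
    {0x40007000u, 0x400073FFu},  /* PWR */
};

/* True if all `width` bytes starting at `addr` lie inside a single region. */
bool check_access(const mmio_region_t *regions, size_t count,
                  uint32_t addr, uint32_t width) {
    if (width == 0) {
        return false;
    }
    for (size_t i = 0; i < count; i++) {
        const mmio_region_t *r = &regions[i];
        /* addr <= r->end is checked first, so r->end - addr cannot underflow. */
        if (addr >= r->start && addr <= r->end && width - 1 <= r->end - addr) {
            return true;
        }
    }
    return false;
}
```

Passing the access width means one function can back the 8-bit, 16-bit, and 32-bit proxy calls, and a word access that starts on the last byte of a region is rejected.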

Plat does not permit any peripheral access for the FIDO2 module, which performs only parsing and logic, but does permit access to the USB, PWR, and RCC peripheral memory for the USB module, which includes the entire USB stack. Access to PWR and RCC, which control power and clock gating for all of the chip’s peripherals, is necessary to allow the USB driver to configure the USB peripheral on startup. A future iteration could allow the specification of bit-level access control over these shared configuration regions to ensure that the USB module can access only the fields of the RCC and PWR registers that correspond to the USB peripheral.

Plat’s peripheral proxy allows us to adapt existing device drivers to work in a sandboxed environment. To adapt the standard USB driver used in the original SoloKey code to work in our sandbox, the only change necessary is to replace peripheral memory accesses with calls to the imported peripheral proxy functions.
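As an illustration of what that change looks like in driver code, the sketch below rewrites a register read-modify-write to go through the proxy. The register address, the bit, and the module-side signatures of `memReadWord` and `memWriteWord` are assumptions; the two proxy functions are mocked with a plain variable here so that the example runs on a PC, whereas on the device they would be imports provided by the host environment.

```c
#include <stdint.h>

/* Hypothetical register address (inside the USB region) and control bit. */
#define EXAMPLE_REG_ADDR 0x40006840u
#define EXAMPLE_BIT      (1u << 0)

/* Mock of the imported peripheral proxy, backed by an ordinary variable. */
uint32_t fake_register = 0x3u;

uint32_t memReadWord(uint32_t addr) {
    return addr == EXAMPLE_REG_ADDR ? fake_register : 0;
}

void memWriteWord(uint32_t addr, uint32_t value) {
    if (addr == EXAMPLE_REG_ADDR) {
        fake_register = value;
    }
}

/* Before sandboxing, a driver would typically write:
 *     *(volatile uint32_t *)EXAMPLE_REG_ADDR &= ~EXAMPLE_BIT;
 * Inside the sandbox, the same read-modify-write uses the proxy calls. */
void driver_clear_example_bit(void) {
    uint32_t value = memReadWord(EXAMPLE_REG_ADDR);
    memWriteWord(EXAMPLE_REG_ADDR, value & ~EXAMPLE_BIT);
}
```

The driver logic is unchanged; only the way it reaches the hardware differs, which is why the porting effort stays small.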

This setup allows Plat to sandbox its complex USB device driver, ensuring that bugs in the driver and USB stack cannot compromise the security of the entire security key.

Conclusion

Plat’s privilege separation approach allows it to preserve the confidentiality and integrity of the secret key and prevent signature abuse even in the presence of exploitable bugs in the bug-prone code contained in the FIDO2 and USB modules. While Plat does not protect the system from all bugs, it does provide strong security guarantees for many likely classes of bugs, and it does so with reasonable performance overhead: the slowest step of an authentication increases from 277ms to 600ms. For an authentication operation that a user performs a few times a day at most, this increase is insignificant (not to mention that many users today spend several minutes trying to guess the password they used for each service).

Privilege separation has the capability to convert devastating bugs into trivial ones by means of “damage control”, but is difficult to implement effectively. Plat explores privilege separation in the new context of embedded devices, addressing the unique challenges that come with bare-metal execution and demonstrating that for many applications the overhead that comes with sandboxing is reasonable. We hope to see more systems adopt privilege separation as an additional layer of defense and hope for improved tools to make it easy to do so in the future.